Wrap your tf.train or tf.keras.optimizers Optimizer as follows: Manually: Enable automatic mixed precision via TensorFlow API This feature is available in V100 and T4 GPUs, and TensorFlow version 1.14 and newer supports AMP natively. This process can be configured automatically using automatic mixed precision (AMP). Models that contain convolutions or matrix multiplication using the tf.float16 data type will automatically take advantage of Tensor Core hardware whenever possible. TensorFlow supports FP16 storage and Tensor Core math. However, performing only certain arithmetic operations in FP16 results in performance gains when using compatible hardware accelerators, decreasing training time and reducing memory usage, typically without sacrificing model performance. Due to the smaller representable range of float16, though, performing the entire training with FP16 tensors can result in gradient underflow and overflow errors. Performing arithmetic operations in FP16 takes advantage of the performance gains of using lower-precision hardware (such as Tensor Cores). To use Tensor Cores, FP32 models need to be converted to use a mix of FP32 and FP16. NVIDIA has also added automatic mixed-precision capabilities to TensorFlow.

NVIDIA T4 and NVIDIA V100 GPUs incorporate Tensor Cores, which accelerate certain types of FP16 matrix math, enabling faster and easier mixed-precision computation. Mixed-precision training usually achieves the same accuracy as single-precision training using the same hyper-parameters. Mixed-precision training offers significant computational speedup by performing operations in half-precision format whenever it’s safe to do so, while storing minimal information in single precision to retain as much information as possible in critical parts of the network. Mixed precision uses both FP16 and FP32 data types when training a model.

16-bit precision is a great option for running inference applications, however if you’re training a neural network entirely at this precision, the network may not converge to required accuracy levels without higher precision result accumulation.Īutomatic mixed precision mode in TensorFlow

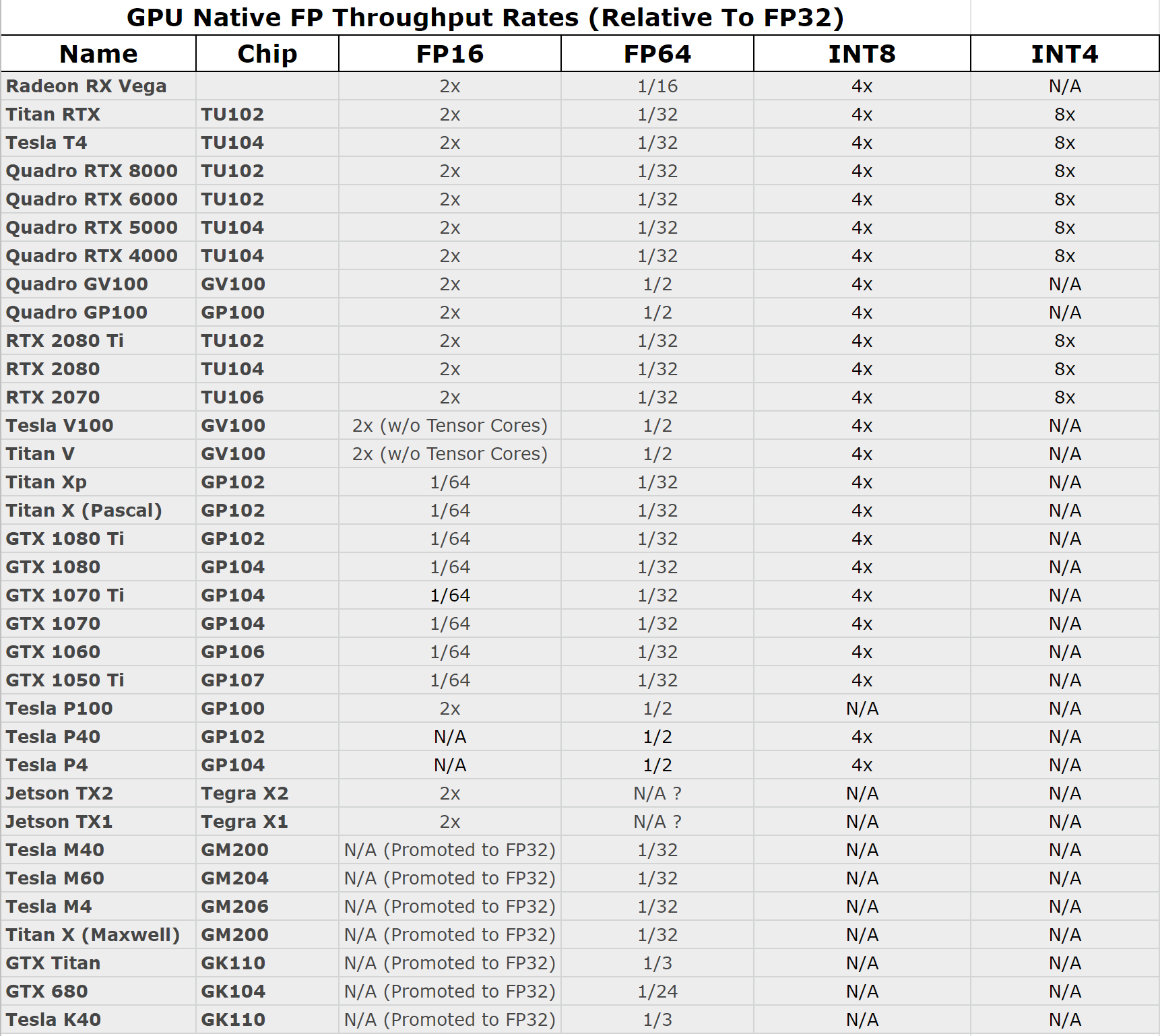

#Fp32 vs fp64 full

In fact, it has been supported as a storage format for many years on NVIDIA GPUs: High performance FP16 is supported at full speed on NVIDIA T4, NVIDIA V100, and P100 GPUs. Lowering the required memory lets you train larger models or train with larger mini-batches. Half-precision halves the number of bytes accessed, reducing the time spent in memory-limited layers. Storing FP16 data reduces the neural network’s memory usage, which allows for training and deployment of larger networks, and faster data transfers than FP32 and FP64.Įxecution time of ML workloads can be sensitive to memory and/or arithmetic bandwidth. Half-precision floating point format (FP16) uses 16 bits, compared to 32 bits for single precision (FP32).

#Fp32 vs fp64 how to

We’ll show you how to use these features, and how the performance benefit of using 16-bit and automatic mixed precision for training often outweighs the higher list price of NVIDIA’s newer GPUs. We’ll also touch on native 16-bit (half-precision) arithmetics and Tensor Cores, both of which provide significant performance boosts and cost savings. In this post, we’ll revisit some of the features of recent generation GPUs, like the NVIDIA T4, V100, and P100. We recently reduced the price of NVIDIA T4 GPUs, making AI acceleration even more affordable. This flexibility is designed to let you get the right tradeoff between cost and throughput during training or cost and latency for prediction. To do this, we offer many options for accelerating ML training and prediction, including many types of NVIDIA GPUs. Google Cloud wants to help you run your ML workloads as efficiently as possible. Running ML workloads more cost effectively
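The precision tradeoffs described in this post can be checked numerically without a GPU or TensorFlow. These are FP16's halved storage, its narrow range (gradient underflow and overflow), and the need for higher-precision accumulation. The sketch below is a standard-library Python simulation, not TensorFlow's AMP implementation. It assumes IEEE 754 binary16/binary32 rounding as provided by the `struct` module's `e` and `f` format codes. The helper names (`to_fp16`, `to_fp32`, `tensor_megabytes`, `fits_in_fp16`, `accumulate`) and the 25M-element tensor size are hypothetical, chosen only for illustration.

```python
import struct

# Bytes per element, via struct format codes (assumed IEEE 754 layouts):
# 'e' = binary16 (FP16), 'f' = binary32 (FP32), 'd' = binary64 (FP64).
BYTES = {
    "fp16": struct.calcsize("e"),
    "fp32": struct.calcsize("f"),
    "fp64": struct.calcsize("d"),
}


def round_to(fmt: str, x: float) -> float:
    """Round a Python float to the given struct format and back.

    struct raises OverflowError if x is outside the format's finite range.
    """
    return struct.unpack(fmt, struct.pack(fmt, x))[0]


def to_fp16(x: float) -> float:
    """Hypothetical helper: simulate storing x as FP16."""
    return round_to("<e", x)


def to_fp32(x: float) -> float:
    """Hypothetical helper: simulate storing x as FP32."""
    return round_to("<f", x)


def tensor_megabytes(num_elements: int, dtype: str) -> float:
    """Storage for a dense tensor in MB, ignoring any framework overhead."""
    return num_elements * BYTES[dtype] / 1e6


def fits_in_fp16(x: float) -> bool:
    """True if x rounds to a finite FP16 value (i.e. does not overflow)."""
    try:
        to_fp16(x)
        return True
    except OverflowError:
        return False


def accumulate(values, rounder) -> float:
    """Sum values, rounding each input and the running total with `rounder`."""
    total = 0.0
    for v in values:
        total = rounder(total + rounder(v))
    return total


if __name__ == "__main__":
    n = 25_000_000  # hypothetical tensor/model size, for illustration only
    for dtype in ("fp16", "fp32", "fp64"):
        mb = tensor_megabytes(n, dtype)
        print(f"{dtype}: {BYTES[dtype]} bytes/element, {mb:.0f} MB")

    # Underflow: a tiny gradient is flushed to zero in FP16 but not in FP32.
    print("1e-8 as FP16:", to_fp16(1e-8), "| as FP32:", to_fp32(1e-8))

    # Overflow: FP16's largest finite value is 65504.
    print("65504 fits in FP16:", fits_in_fp16(65504.0))
    print("70000 fits in FP16:", fits_in_fp16(70000.0))

    # Accumulation: 10,000 increments of 1e-4 should sum to about 1.0.
    steps = [1e-4] * 10_000
    print("FP16 accumulator:", accumulate(steps, to_fp16))
    print("FP32 accumulator:", accumulate(steps, to_fp32))
```

Running the script shows the FP16 running total stalling far below 1.0. The stall begins once each increment is smaller than half the spacing between neighboring FP16 values, while the FP32 accumulator stays close to 1.0. This illustrates why results are accumulated in higher precision during mixed-precision training.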
